We introduce RoDyGS, a dynamic Gaussian Splatting pipeline that learns motion and geometry from casual videos using robust regularization. RoDyGS outperforms pose-free dynamic neural fields and achieves rendering quality competitive with static baselines.

Dynamic view synthesis (DVS) has advanced remarkably in recent years, achieving high-fidelity rendering while reducing computational costs. Despite the progress, optimizing dynamic neural fields from casual videos remains challenging, as these videos do not provide direct 3D information, such as camera trajectories or the underlying scene geometry.

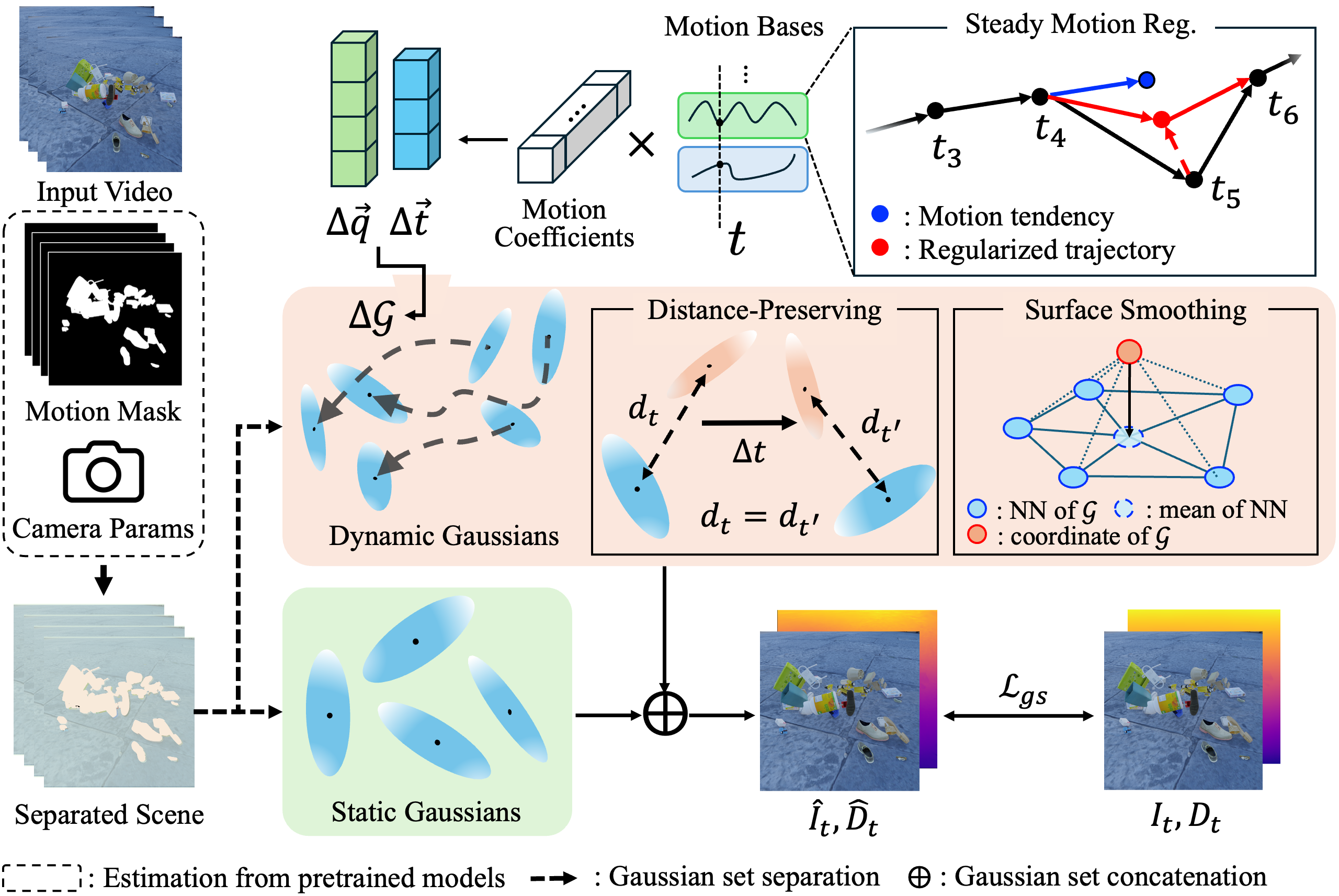

In this work, we present RoDyGS, an optimization pipeline for dynamic Gaussian Splatting from casual videos. It effectively learns motion and underlying geometry of scenes by separating dynamic and static primitives, and ensures that the learned motion and geometry are physically plausible by incorporating motion and geometric regularization terms.

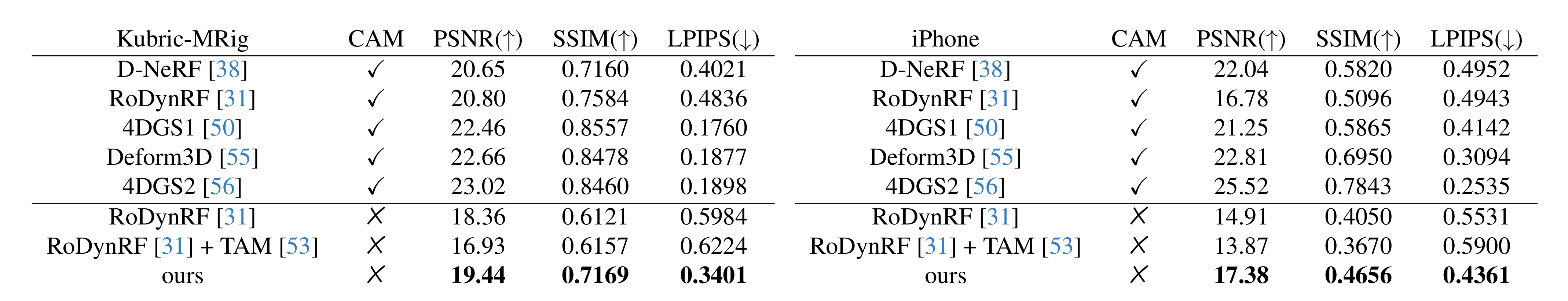

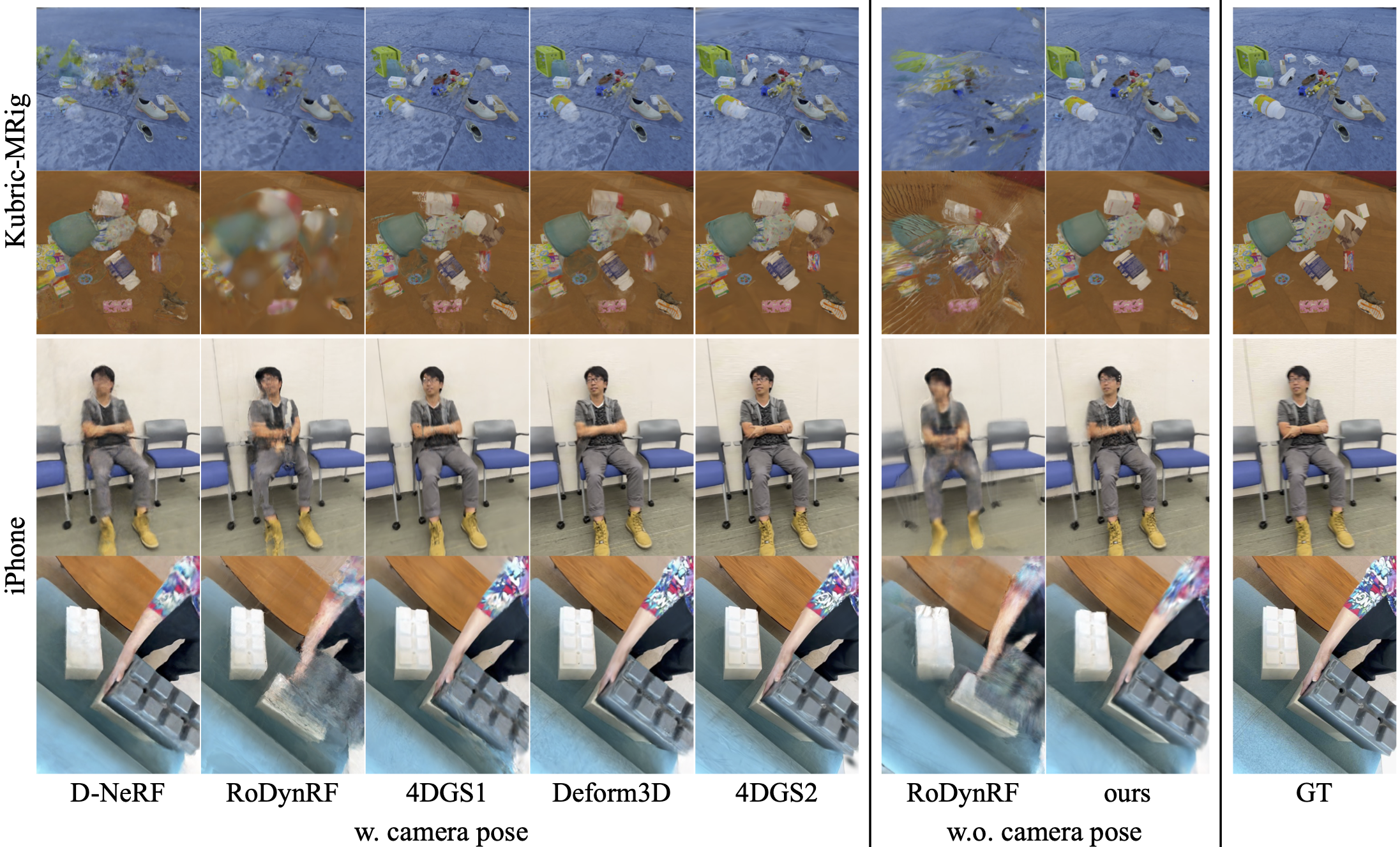

We also introduce a comprehensive benchmark, Kubric-MRig, that provides extensive camera and object motion along with simultaneous multi-view captures, features that are absent in previous benchmarks. Experimental results demonstrate that the proposed method significantly outperforms previous pose-free dynamic neural fields and achieves competitive rendering quality compared to existing pose-free static neural fields.

Starting with a casually captured video input, RoDyGS extracts camera poses and depths using MASt3R, while motion masks are derived from TAM. It then separates static and dynamic Gaussians, enabling each to be independently learned for stationary background and moving objects.
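The static/dynamic separation can be illustrated with a minimal sketch. It assumes a pinhole camera, a per-pixel depth map, and a boolean motion mask for one frame; the function name `split_points_by_mask` and its parameters are hypothetical and are not taken from the RoDyGS code. Pixels are back-projected to camera-space 3D points and then partitioned by the mask, which is one plausible way to seed the two sets of Gaussians.

```python
import numpy as np

def split_points_by_mask(depth, mask, fx, fy, cx, cy):
    """Back-project a depth map and split the points by a motion mask.

    depth: (H, W) float array of per-pixel depth.
    mask:  (H, W) bool array, True where the pixel belongs to a moving object.
    fx, fy, cx, cy: pinhole intrinsics (an illustrative assumption).
    Returns (static_points, dynamic_points), each of shape (N, 3),
    expressed in the camera frame.
    """
    h, w = depth.shape
    # v indexes rows (image y), u indexes columns (image x).
    v, u = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    x = (u - cx) / fx * depth
    y = (v - cy) / fy * depth
    points = np.stack([x, y, depth], axis=-1)  # (H, W, 3)
    # Boolean indexing flattens the two leading axes.
    return points[~mask], points[mask]
```

In a full pipeline the camera-space points would still need to be moved into a shared world frame using the estimated camera poses; that step is omitted here.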

The primary optimization objective, $L_{gs}$, combines a photometric loss and a Pearson depth loss, with depth guidance extracted from images using DepthAnything. Additionally, for dynamic Gaussians, Gaussian distance-preserving regularization ($L_{tc}$) and surface smoothness regularization ($L_{s}$) are applied. For the motion bases, continuous motion regularization ($L_{mc}$) is employed.
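Two of these terms can be sketched in a few lines. The exact formulations used by RoDyGS are not given in this text, so the code below shows commonly used forms under stated assumptions: a Pearson depth loss written as one minus the correlation between rendered and reference depth (which makes it invariant to the scale and shift of monocular depth), and a distance-preserving term that penalizes changes in the distance between paired Gaussian centers across two time steps. The function names and the `pairs` argument are hypothetical.

```python
import numpy as np

def pearson_depth_loss(rendered, reference, eps=1e-8):
    """1 - Pearson correlation between two depth maps."""
    r = rendered.ravel() - rendered.mean()
    g = reference.ravel() - reference.mean()
    corr = (r * g).sum() / (np.sqrt((r ** 2).sum() * (g ** 2).sum()) + eps)
    return 1.0 - corr

def distance_preserving_loss(pos_t0, pos_t1, pairs):
    """Mean absolute change in pairwise distance between two time steps.

    pos_t0, pos_t1: (N, 3) Gaussian centers at two time steps.
    pairs: (M, 2) integer index pairs, e.g. nearest neighbors
           (assumed to be precomputed; how they are chosen is not shown).
    """
    d0 = np.linalg.norm(pos_t0[pairs[:, 0]] - pos_t0[pairs[:, 1]], axis=-1)
    d1 = np.linalg.norm(pos_t1[pairs[:, 0]] - pos_t1[pairs[:, 1]], axis=-1)
    return np.abs(d0 - d1).mean()
```

Under these definitions, a reference depth map that differs from the rendered one only by a positive scale and an offset gives a Pearson loss near zero, and a rigid translation of all Gaussian centers gives a distance-preserving loss near zero.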

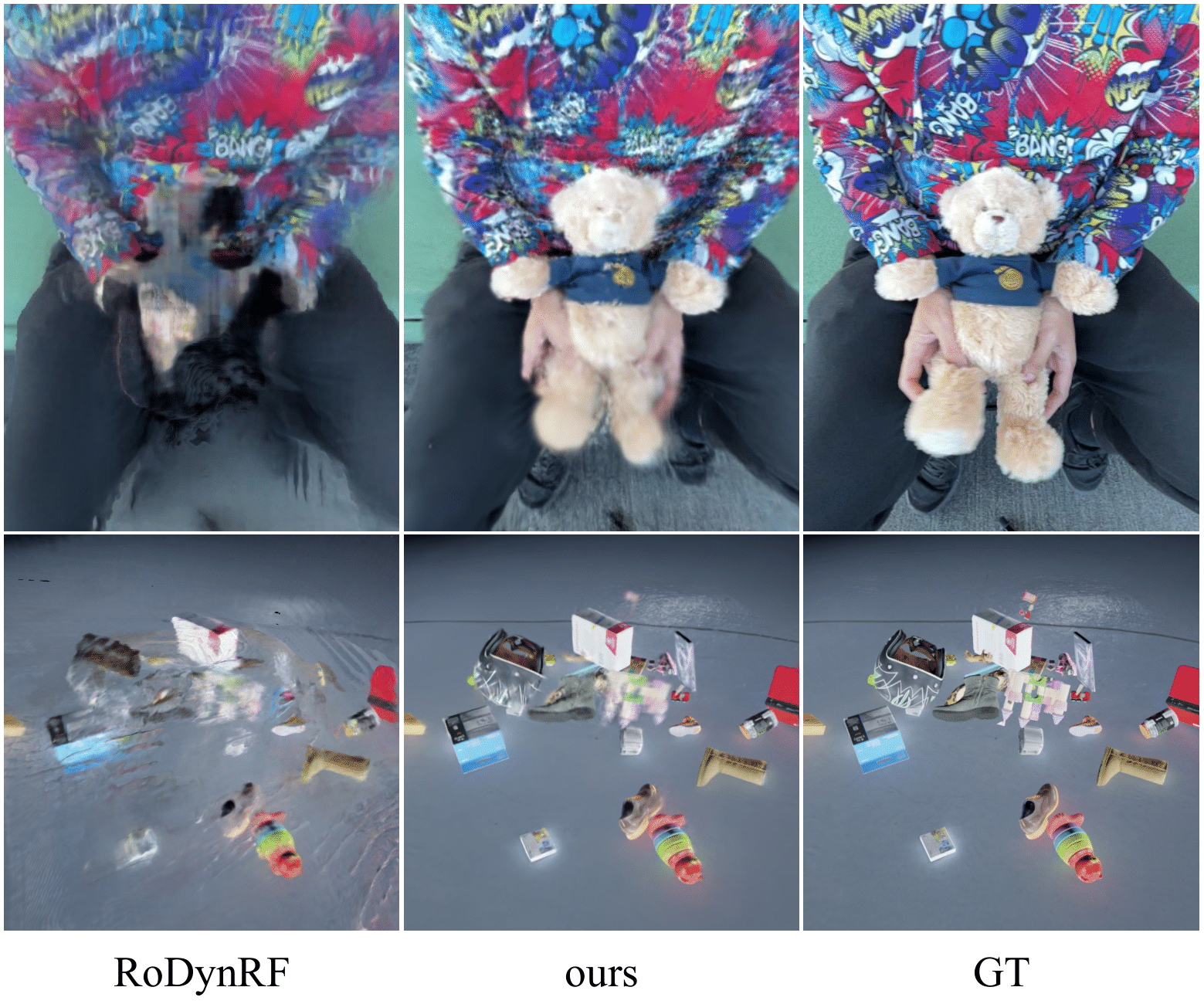

DVS quality on the Kubric-MRig and iPhone datasets. CAM (in the table) denotes the availability of ground-truth camera poses during training. RoDyGS outperforms RoDynRF in novel-view rendering quality on both datasets.

@article{jeong2024rodygs,
  title={RoDyGS: Robust Dynamic Gaussian Splatting for Casual Videos},
  author={Yoonwoo Jeong and Junmyeong Lee and Hoseung Choi and Minsu Cho},
  journal={arXiv preprint arXiv:2412.03077},
  year={2024}
}